Studio user guide

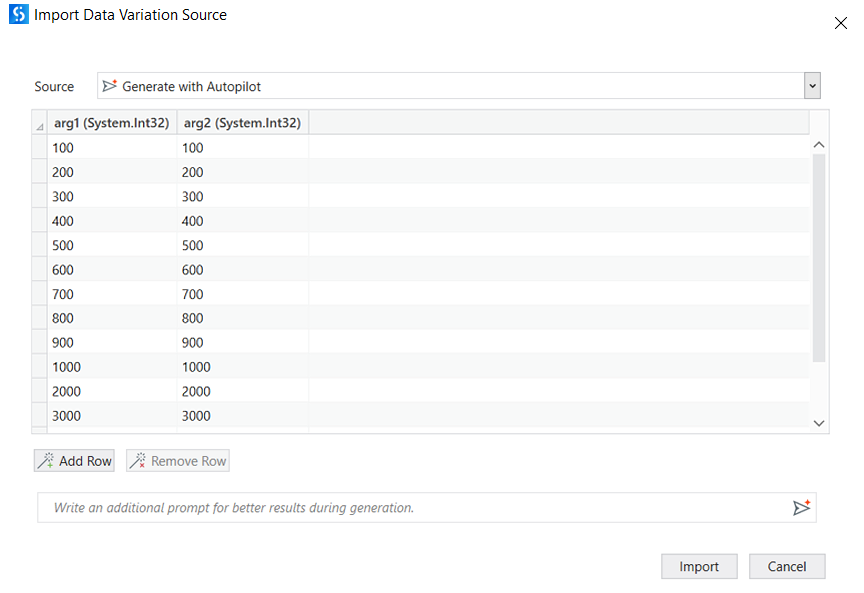

AI-generated test data

You can use the AI-powered capabilities of Autopilot™ to generate test data tailored to your testing needs. You first enter instructions, and Autopilot then produces arguments and variables that are relevant to both the instructions and the associated test data set. You can also instruct Autopilot to generate new arguments for your test data set, which are then added to your test case.

Prerequisites

For Autopilot to generate test data, your test case must contain arguments of the types that we currently support. Check the Supported argument types section for the list of argument types that you can include in your test cases.

Supported argument types

The supported argument types for AI-generated test data are:

- String

- Int32, Int64

- Double

- Decimal

- Boolean

- Char

- Byte

- SByte

- UInt16, UInt32, UInt64

- Single
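Before opening the Add Test Data dialog, you may want to check which arguments in a test case use one of the supported types. The following Python snippet is a minimal sketch of that check under stated assumptions: the `SUPPORTED_TYPES` set and the `split_arguments` helper are hypothetical, the type names are copied from the list above, and the argument names and types are typed in by hand rather than read from a Studio project.

```python
# Hypothetical helper: these .NET type names mirror the
# "Supported argument types" list; they are not read from Studio.
SUPPORTED_TYPES = {
    "String", "Int32", "Int64", "Double", "Decimal", "Boolean",
    "Char", "Byte", "SByte", "UInt16", "UInt32", "UInt64", "Single",
}


def split_arguments(arguments: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (supported, unsupported) argument names for a test case.

    `arguments` maps argument names to type names, for example
    {"in_CustomerName": "String"}. A namespace prefix such as
    "System." is stripped before the lookup.
    """
    supported, unsupported = [], []
    for name, type_name in arguments.items():
        # Keep only the part after the last dot: "System.Int32" -> "Int32".
        short_name = type_name.rsplit(".", 1)[-1]
        if short_name in SUPPORTED_TYPES:
            supported.append(name)
        else:
            unsupported.append(name)
    return supported, unsupported


if __name__ == "__main__":
    # Hypothetical test case arguments entered by hand.
    args = {
        "in_CustomerName": "System.String",
        "in_Quantity": "Int32",
        "in_OrderDate": "System.DateTime",
    }
    ok, not_ok = split_arguments(args)
    print("Supported:", ok)
    print("Unsupported:", not_ok)
```

In this example, `in_OrderDate` lands in the unsupported group because `DateTime` is not in the supported list.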

Generate test data for test cases

To generate test data with Autopilot for a low-code or coded test case:

- Open the Test Explorer.

- Right-select the test case for which you want to generate test data, and select Add Test Data.

- From the Source dropdown list, select Generate with Autopilot.

- Enter instructions in the Write down your prompt text field to generate a first round of test data.

- Optionally, refine the generated test data with additional prompt instructions. If instructed, Autopilot adds arguments beyond the existing ones, and these additional arguments are then added to the test case. You can also instruct Autopilot to generate a specific number of data variations.

- When you are satisfied with the generated test data, select Import to add the data to your test case.

Figure 1. Dialog box when generating test data using Autopilot

Best practices

For recommendations on generating AI-powered test data effectively, see Generating synthetic test data. These best practices include tips on:

- How to create arguments for a robust set of data.

- How to use instructions for your preferred data combination methods.

- How to use prompts to customize your data set.