
Automation Ops user guide

UiPath® Automation Ops™ - Pipelines

Automation Ops™ - Pipelines provides an easy way to set up a continuous integration/continuous delivery (CI/CD) system that manages the code of your automation projects stored in external repositories, such as GitHub or Azure DevOps.

A pipeline consists of a set of steps carried out by automation processes that act on the code in your environment. These processes, called pipeline processes, use the Pipeline activities package. To view these pipeline processes, a user must have access to the Orchestrator folder that contains them.

When a pipeline is triggered, a job is started which runs the associated pipeline process using an unattended robot.

Prerequisites

- Robot versions:

  - 2021.10 and 2022.4: .NET Desktop Runtime 6.0.* must be manually installed on the robot machine.

  - 2022.8 and newer: .NET Desktop Runtime is automatically installed with the robot.

- Process particularities:

  - The pipeline process must be configured to run as a background process. This is done from the project settings menu in Studio. Learn more about background processes.

  - When publishing the pipeline process from Studio to Orchestrator, make sure to also select Include Sources from the Publish Options section. Read more about publishing automation projects.

Configuration

Automation Ops™ - Pipelines work by running pipeline processes on unattended robots, so a specific Orchestrator configuration is needed before you can use them. This configuration is called a Pipelines runtime environment.

The basic Orchestrator configuration consists of a dedicated folder that contains the pipeline processes and a robot account, plus a machine or machine template that runs the pipeline jobs.

The robot account created during the quick setup is essential: all pipelines (Orchestrator jobs) are run on its behalf. Deleting the robot account invalidates the pipeline runtime configuration, and you then need to rerun the quick setup. Deleting the dedicated pipelines folder in Orchestrator breaks all pipelines associated with it.

Initial setup

When setting up Automation Ops™ - Pipelines for the first time, a quick setup window is displayed, allowing you to choose the tenant and the type of machine to run future pipelines on. You can either use an existing machine from your environment or automatically create a new serverless machine named "Pipelines robot".

If you choose to create a new serverless machine, make sure there are enough robot units available on your tenant.

As part of the quick setup experience, a new folder named "Pipelines" and the following roles are automatically created:

- Pipelines tenant role

- Pipelines folder role

The pipelines robot gets the following roles assigned automatically:

- Tenant: Pipelines tenant role, Allow to be Automation User, Allow to be Automation Publisher

- Pipelines Folder: Pipelines folder role, Automation User, Automation Publisher

Make sure that the robot account created for pipelines is also assigned to the target Orchestrator folder. This is required because pipelines run under that account. For details, refer to Configuring Access for Accounts.

The Run Tests activity runs the tests in the provided Orchestrator folder. The Pipelines robot account publishes the package in the respective folder, but the tests can be run by any robot account in that folder that qualifies for the test run, not only by the Pipelines robot account.

Additionally, the pre-defined pipeline processes are available by default. They are listed, together with their arguments, under Pre-defined pipeline processes.

You can also start working with pipelines using the templates available in UiPath Studio and Studio Web (Templates tab).

You can use the following templates:

- Build and promote with approval pipeline:

  - Usage: management of an automation project from inception to approval.

  - Steps: Clone, Analyze, Run Tests, Build, Publish Package, Update Process, Approve, Download Package, Upload Package, and Update Process.

- Update process from a code line:

  - Usage: a streamlined procedure for updating and modifying ongoing processes.

  - Steps: Clone, Analyze, Build, Publish Package, and Update Process.

Cloud Serverless robots created in the initial setup are of Standard size. If the Run Tests activity targets an Orchestrator folder that uses Cloud Serverless robots, make sure those robots are also of Standard size.

When using the Build activity, check the compatibility requirements between the automation projects you are building and the machine running the process.

For example, a project created with Windows-Legacy or Windows compatibility cannot be built on a Cloud Serverless robot. You must use a Windows-based machine instead.

When running a pipeline that generates and publishes a process using Integration Service connections, make sure that all necessary project folders are committed to your source control provider. For example, when initializing git from Studio, you must commit all associated project folders, such as .settings, .project, and .tmh.

Creating the first pipeline

After the Orchestrator setup is complete, you must configure the initial integration between Automation Ops™ - Pipelines, the GitHub repository holding your code, and the Orchestrator pipelines runtime environment. When doing this integration, you also create the first pipeline.

Follow these steps:

- In Automation Cloud™, navigate to Automation Ops™ > Pipelines from the left-side navigation bar.

- Select New Pipeline. If the external repository is already connected in Source Control, it is connected to the pipeline automatically.

Note: Only one UiPath® Automation Cloud™ organization can be connected to a GitHub organization at a time.

- In the Location tab, select the external repository organization, repository, branch, and an automation project (optional). Select Next.

- In the Pipeline definition tab, select the pipeline process. If your pipeline process contains arguments, you can add their values.

-

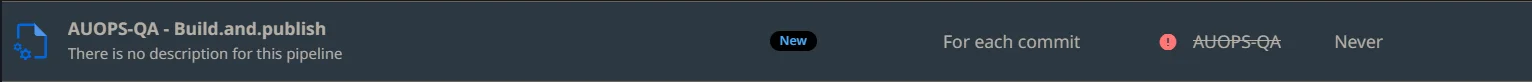

In the Save & run tab, configure the following:

- Project name - Enter a name for the pipeline project. By default, the name is composed of the repository name and the pipeline automation project name.

- Description - Optionally, add a description.

- Run this pipeline - Select how you want the pipeline to run:

  - For each commit - The pipeline automation is triggered every time the code of the selected project changes in the repository.

  - I'll run manually - The pipeline automation is triggered manually.

Note: For manually triggered pipelines, the commit used when a job starts is the latest commit that changed the folder containing the selected project.json file. This is not necessarily the latest commit in the repository: a newer commit that changes no files in that folder is not used.

- Select Save to save the pipeline, or Save and run to save and also run the pipeline.

If no automation project is selected in the Location tab and the pipeline is set to run for each commit, any commit in the repository triggers the pipeline.

The ProjectPath argument is populated with the value selected in the Automation project (optional) field in the Location tab of the pipeline configuration.

If the field is left blank, the ProjectPath process argument remains empty. This scenario can be used for repositories that contain only one automation project.
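The trigger rules above (any commit when no project is selected, otherwise only commits that change the project folder, and the latest such commit for manual runs) can be modeled in a few lines. The following is a minimal Python sketch, not the Automation Ops implementation. It assumes that ProjectPath is a forward-slash path to project.json relative to the repository root and that the commit history is available as (sha, changed files) pairs, newest first; `touches_project` and `commit_for_manual_run` are hypothetical names.

```python
from pathlib import PurePosixPath
from typing import Iterable, Optional, Sequence, Tuple

# Assumption: a commit is modeled as (sha, paths changed by that commit),
# with paths relative to the repository root and using forward slashes.
Commit = Tuple[str, Sequence[str]]


def touches_project(changed_files: Iterable[str], project_path: str) -> bool:
    """Return True if a commit changing these files would trigger a
    'For each commit' pipeline for the given ProjectPath."""
    if not project_path:
        # No automation project selected: any commit triggers the pipeline.
        return True
    # The folder that contains the selected project.json file.
    project_folder = PurePosixPath(project_path).parent
    return any(project_folder in PurePosixPath(f).parents for f in changed_files)


def commit_for_manual_run(history: Sequence[Commit], project_path: str) -> Optional[str]:
    """Return the newest commit that changed the project folder.

    `history` is assumed to be ordered newest first. Commits that change
    no files in the project folder are skipped, even if they are newer.
    """
    for sha, changed_files in history:
        if touches_project(changed_files, project_path):
            return sha
    return None
```

For example, with the history `[("c3", ["README.md"]), ("c2", ["Invoices/Main.xaml"])]` and ProjectPath `Invoices/project.json`, `commit_for_manual_run` returns `"c2"`, because c3 does not change anything in the Invoices folder. Comparing path components, rather than string prefixes, keeps a folder such as InvoicesOld from matching Invoices.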

Manually running a pipeline

- In Automation Cloud™, navigate to Automation Ops™ from the left-side navigation bar.

- Select Pipelines. The available pipelines are displayed.

- Select a pipeline, and then select Start new job. This triggers the pipeline, and you can follow the progress of each step in real time.

You can also edit the pipeline by selecting Pipeline settings. This displays the pipeline summary, from which you can:

- Edit pipeline - select to update the pipeline. You can only update the pipeline name, description, trigger type, and custom pipeline arguments. The location and pipeline definition cannot be changed.

- Delete pipeline - select to delete the pipeline. All information related to the pipeline is deleted.

Pre-defined pipeline processes

The following table describes the pre-defined pipeline processes that are available by default:

| Pipeline process | Steps |
|---|---|
| Build.and.publish | Clone -> Analyze -> Build -> Publish |
| Copy.package.between.environments | Download package -> Publish package |
| Update.process.from.code | Clone -> Analyze -> Build -> Publish package -> Update process |
| Update.with.tests | Clone -> Analyze -> Run Tests -> Build -> Publish package -> Update process |
| Build.and.promote.with.approval | Clone -> Analyze -> Run Tests -> Build -> Publish package -> Update process -> Approve -> Download package -> Upload package -> Update process |

The latest versions of the pre-defined pipeline templates are available in the Marketplace.

These default pipeline processes come with the following arguments:

- Build.and.promote.with.approval:

  - ProcessName - The name of the process to be updated. Only used if the project is a process.

  - Approver - The email address of the approver of the task created in Action Center.

  - SkipTesting - Allows you to choose whether the test cases are executed during the pipeline.

  - AnalyzePolicy - The governance policy holding the workflow analyzer rules used in the pipeline process. If left empty, the analysis of the project is skipped.

  - SkipValidation - Allows you to skip validation before building the package. This value is disabled by default.

  - FirstOrchestratorFolder - The Orchestrator folder where the built package is published.

  - FirstOrchestratorUrl - The URL to the Orchestrator where the built package is published.

  - SecondOrchestratorFolder - The Orchestrator folder where the built package is published after approval.

  - SecondOrchestratorUrl - The URL to the Orchestrator where the built package is published after approval.

  - TestingFolder - The Orchestrator folder where the tests are executed.

  - HaveSamePackageFeed - This field is set to "False" by default. Set it to "True" if the first and second environments use the same package/library feed.

- Build.and.publish:

  - AnalyzePolicy - The governance policy holding the workflow analyzer rules used in the pipeline process. If left empty, the analysis of the project is skipped.

  - SkipValidation - Allows you to skip validation before building the package. This value is disabled by default.

  - OrchestratorUrl - The URL to the Orchestrator where the built package is published.

  - OrchestratorFolder - The Orchestrator folder where the built package is published.

- Copy.package.between.environments:

  - PackageName - The name of the package to be copied.

  - IsLibrary - Defines whether the package is a library.

  - PackageVersion - The version of the package to be copied.

  - SourceOrchestratorFolder - The Orchestrator folder from which the package is copied.

  - SourceOrchestratorUrl - The URL to the Orchestrator from which the package is copied.

  - DestinationOrchestratorUrl - The URL to the Orchestrator to which the package is copied.

  - DestinationOrchestratorFolder - The Orchestrator folder to which the package is copied.

- Update.process.from.code:

  - ProcessName - The name of the process to be updated. Only used if the project is a process.

  - AnalyzePolicy - The governance policy holding the workflow analyzer rules used in the pipeline process. If left empty, the analysis of the project is skipped.

  - SkipValidation - Allows you to skip validation before building the package. This value is disabled by default.

  - OrchestratorUrl - The URL to the Orchestrator where the package to be updated is found.

  - OrchestratorFolder - The Orchestrator folder where the package to be updated is found.

- Update.with.tests:

  - ProcessName - The name of the process to be updated. Only used if the project is a process.

  - AnalyzePolicy - The governance policy holding the workflow analyzer rules used in the pipeline process. If left empty, the analysis of the project is skipped.

  - SkipTesting - Allows you to skip testing before building the package. This value is disabled by default.

  - OrchestratorUrl - The URL to the Orchestrator where the package to be updated is found.

  - OrchestratorFolder - The Orchestrator folder where the package to be updated is found.

  - OrchestratorTestingFolder - The Orchestrator folder where the tests used in the pipeline are found.
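To make the effect of the AnalyzePolicy and SkipTesting arguments concrete, the following minimal Python sketch derives which steps of Build.and.promote.with.approval would run for a given set of argument values. It is an illustrative model, not how the pipeline process actually evaluates its arguments: `effective_steps` is a hypothetical name, and only the two documented skip rules are modeled.

```python
from typing import List, Mapping

# Step sequence of Build.and.promote.with.approval, as listed in the table of
# pre-defined pipeline processes.
BUILD_AND_PROMOTE_STEPS: List[str] = [
    "Clone", "Analyze", "Run Tests", "Build", "Publish package",
    "Update process", "Approve", "Download package", "Upload package",
    "Update process",
]


def effective_steps(args: Mapping[str, object]) -> List[str]:
    """Return the steps expected to run for the given argument values.

    Only two documented rules are modeled (hypothetical helper):
    - an empty or missing AnalyzePolicy skips the Analyze step;
    - SkipTesting set to True skips the Run Tests step.
    """
    steps: List[str] = []
    for step in BUILD_AND_PROMOTE_STEPS:
        if step == "Analyze" and not args.get("AnalyzePolicy"):
            continue
        if step == "Run Tests" and args.get("SkipTesting") is True:
            continue
        steps.append(step)
    return steps
```

For example, `effective_steps({"AnalyzePolicy": "", "SkipTesting": True})` returns the eight remaining steps, starting with Clone and Build.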

There is a compatibility requirement between the automation projects you intend to build and the machine that runs the pipeline process.

The correct mapping is:

- Windows-Legacy project → Build OS: Windows only

- Windows project → Build OS: Windows only

- Cross-Platform project → Build OS: Windows or Linux
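A pre-flight check of this mapping could look like the following minimal Python sketch. The `Compatibility` enum and the `can_build` and `incompatible` functions are hypothetical, and Build OS values are plain strings for illustration; the sketch only encodes the three rules listed above.

```python
from enum import Enum
from typing import Dict, FrozenSet, Iterable, List


class Compatibility(Enum):
    """Project compatibility values from the mapping (hypothetical enum)."""
    WINDOWS_LEGACY = "Windows-Legacy"
    WINDOWS = "Windows"
    CROSS_PLATFORM = "Cross-Platform"


# Build OS values that can build each kind of project.
ALLOWED_BUILD_OS: Dict[Compatibility, FrozenSet[str]] = {
    Compatibility.WINDOWS_LEGACY: frozenset({"Windows"}),
    Compatibility.WINDOWS: frozenset({"Windows"}),
    Compatibility.CROSS_PLATFORM: frozenset({"Windows", "Linux"}),
}


def can_build(project: Compatibility, build_os: str) -> bool:
    """True if a machine running build_os can build this project."""
    return build_os in ALLOWED_BUILD_OS[project]


def incompatible(projects: Iterable[Compatibility], build_os: str) -> List[Compatibility]:
    """Projects that the given build machine cannot build."""
    return [p for p in projects if not can_build(p, build_os)]
```

Running such a check before starting a pipeline surfaces mismatches, such as a Windows project targeted at a Linux build machine, before the Build step fails.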

Default pipeline process arguments

Automation Ops™ provides a set of default arguments for pipeline processes, presented in the following table:

| Name | Direction | Argument type | Description |
|---|---|---|---|
| BuildNumber | In | String | A unique number for each pipeline job run. |
| RepositoryUrl | In | String | URL of the repository. Typically used by the Clone activity. |
| CommitSha | In | String | Commit identifier. |
| ProjectPath | In | String | The path to the project.json file. Useful for the Build activity. |
| ComitterUsername | In | String | The username of the person who made the commit. |
| RepositoryType | In | String | The repository type (such as git). |
| RepositoryBranch | In | String | The repository branch used. |
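Inside a pipeline process these values arrive as In arguments. As an illustration only, the following Python sketch models them as an immutable record, keeping the argument names exactly as listed in the table, and ignores custom pipeline arguments such as AnalyzePolicy. The class and its `from_mapping` and `summary` helpers are hypothetical, not part of the Pipeline activities package.

```python
from dataclasses import dataclass, fields
from typing import Mapping


@dataclass(frozen=True)
class PipelineJobArguments:
    """Default In arguments of a pipeline job (hypothetical model).

    Field names mirror the argument names in the table, so they are not
    snake_case; all values are strings.
    """
    BuildNumber: str = ""
    RepositoryUrl: str = ""
    CommitSha: str = ""
    ProjectPath: str = ""
    ComitterUsername: str = ""  # spelled as in the table
    RepositoryType: str = ""
    RepositoryBranch: str = ""

    @classmethod
    def from_mapping(cls, values: Mapping[str, str]) -> "PipelineJobArguments":
        """Keep only the default arguments; custom ones are ignored."""
        known = {f.name for f in fields(cls)}
        return cls(**{name: value for name, value in values.items() if name in known})

    def summary(self) -> str:
        """Short label of the form '<branch>@<short sha> (build <number>)'."""
        return f"{self.RepositoryBranch}@{self.CommitSha[:7]} (build {self.BuildNumber})"
```

Arguments that are not provided keep an empty string, which matches the documented behavior of ProjectPath when no automation project is selected.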

Viewing pipeline logs

Logs are generated for every run of the pipeline. You can view the logs in Automation Ops™ and, because each pipeline run creates a job in Orchestrator, you can also view them in Orchestrator:

- In Automation Ops™, hover over the right side of a pipeline, and then, from the contextual menu, select View Logs.

- In Orchestrator, access the dedicated pipelines folder > Automations > Jobs. In the Source column, look for the Pipelines tag, and then select View Logs.

Removing webhooks manually

If a pipeline was configured to run for each commit when it was created, webhooks were automatically created in GitHub or Azure DevOps.

After deleting the runtime configuration, you should manually remove the webhooks if the Source Control link with the pipeline has already been removed. Leaving them in place does not affect the functionality of the CI/CD service, but we recommend removing them.

For each pipeline that has a trigger on commit and whose Source Control connection has been removed, you must access the GitHub/Azure DevOps repository and delete the webhooks after deleting the runtime environment.

If the Source Control connection has already been removed in your organization and the repository is currently connected to another UiPath organization, you might delete webhooks that are still valid for the second organization. Do not delete these webhooks; otherwise, that organization's pipelines are no longer triggered on commit.

Therefore, before deleting webhooks, make sure that the current repository does not have a valid connection within a valid CI/CD runtime configuration in a UiPath organization.

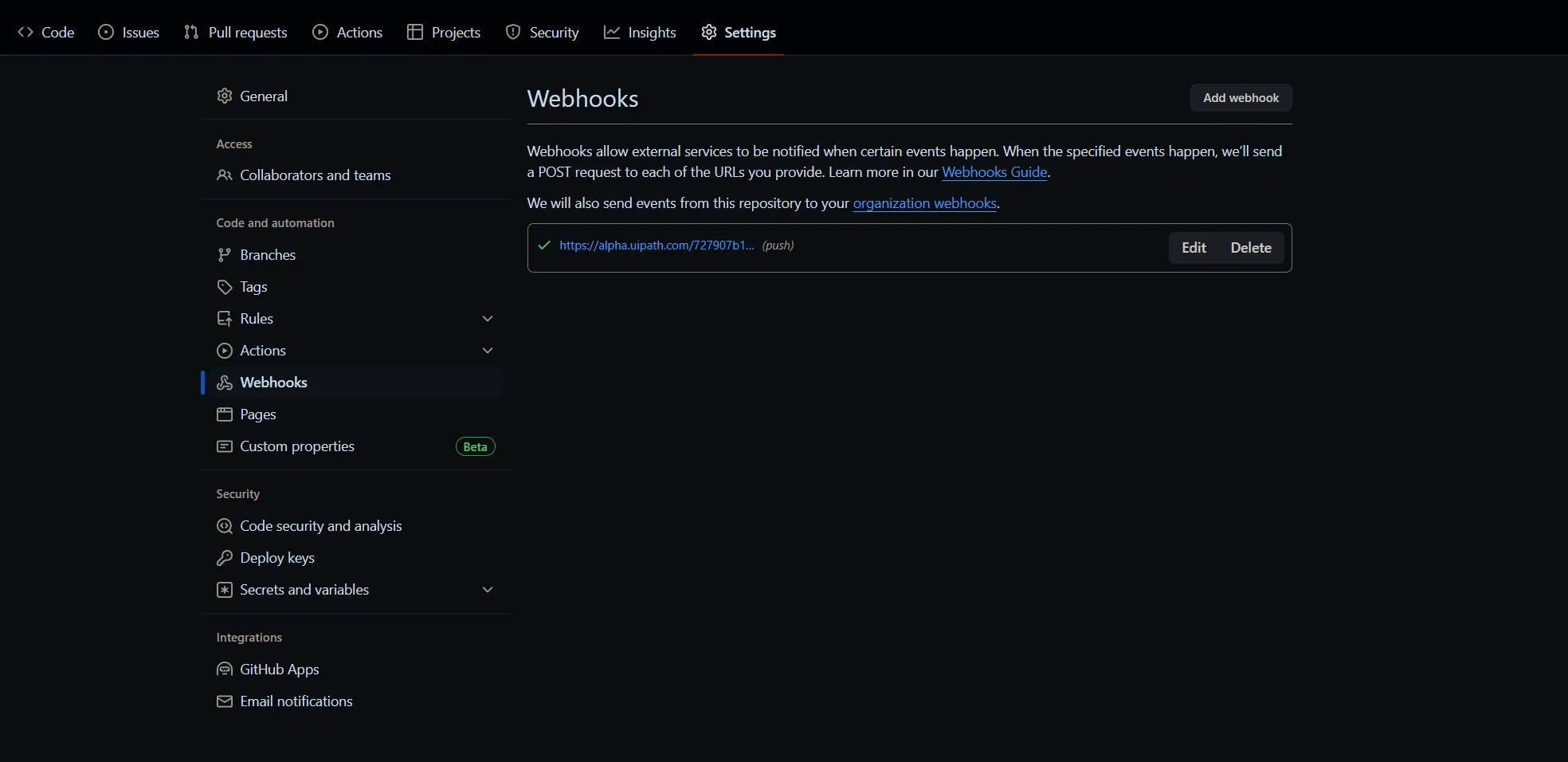

Removing webhooks from GitHub repository

To delete the webhooks from the GitHub repository:

- Go to the GitHub repository and select Settings > Webhooks.

- Delete all webhooks whose URL ends in /roboticsops_/cicd_/api/webhooks/github/pipeline.

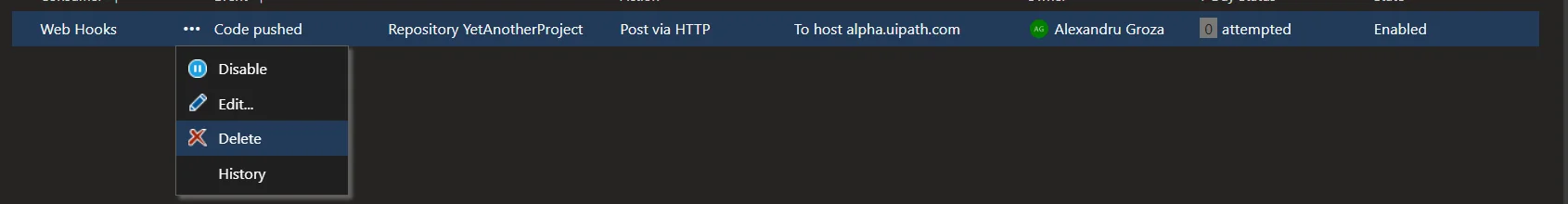

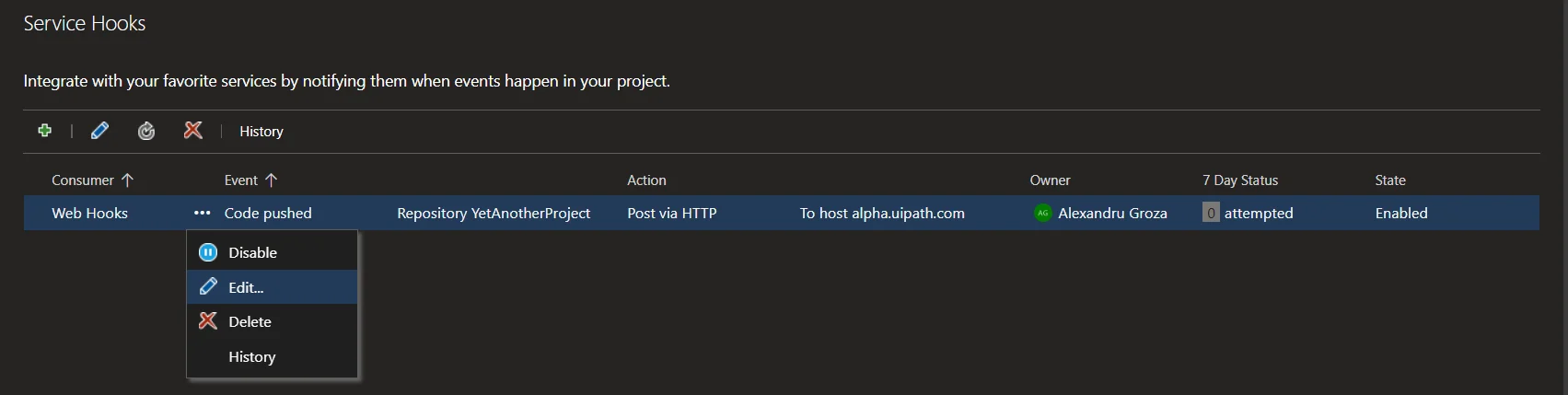

Removing webhooks from Azure DevOps repository

To delete the webhooks from the Azure DevOps repository:

- Go to the Azure DevOps repository and select Project Settings > Service hooks.

- On the webhook to delete, select Edit.

- Make sure that the webhook URL ends in /roboticsops_/cicd_/api/webhooks/azure/pipeline.

- Delete the webhook.
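When a repository has many webhooks, filtering by the URL suffixes given in the steps above can help identify candidates for deletion. The following minimal Python sketch assumes you already have the webhook URLs (for example, copied from the repository settings). It does not call the GitHub or Azure DevOps APIs, and `pipeline_webhooks` is a hypothetical helper. A match only means that the webhook points to Automation Ops; it does not tell you whether another UiPath organization still relies on it, so the check on valid connections described earlier still applies.

```python
from typing import Iterable, List
from urllib.parse import urlsplit

# URL suffixes of pipeline webhooks, as listed in the removal steps.
PIPELINE_WEBHOOK_SUFFIXES = {
    "github": "/roboticsops_/cicd_/api/webhooks/github/pipeline",
    "azure": "/roboticsops_/cicd_/api/webhooks/azure/pipeline",
}


def pipeline_webhooks(urls: Iterable[str], provider: str) -> List[str]:
    """Return the URLs whose path ends with the pipeline webhook suffix.

    `provider` is "github" or "azure" (hypothetical keys). The query string,
    if any, and a trailing slash are ignored when matching.
    """
    suffix = PIPELINE_WEBHOOK_SUFFIXES[provider]
    return [u for u in urls if urlsplit(u).path.rstrip("/").endswith(suffix)]
```

Matching on the parsed URL path, rather than on the raw string, keeps the check from failing when a webhook URL carries query parameters.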