Agents user guide

Agent traces

About traces

Traces are detailed records of everything an agent does during a run, including steps taken, data processed, decisions made, and results generated. Each trace captures a complete timeline of the agent’s behavior, including timestamps, errors, inputs/outputs, and contextual metadata. Use traces for:

- Debugging and troubleshooting: Identify exactly where an agent failed or behaved unexpectedly.

- Performance analysis: Evaluate latency, errors, and throughput across agent runs to optimize behavior.

- Compliance and auditing: Maintain a verifiable record of what the agent did, when, and how, which is essential for audits or regulated workflows.

- Continuous improvement: Use trace insights to fine-tune agent logic, adapt behavior, or train new models.

The following table outlines common use cases where trace visualization can enhance your ability to debug, analyze, and optimize agent behavior. Each example highlights how trace data helps uncover insights and drive better decision-making during development and runtime monitoring.

| Use case | What traces help you do |
|---|---|
| Agent fails during tool call | Find and inspect the exact step, inputs, outputs, and error |
| Performance is slow | Use timestamps to locate bottlenecks |
| Investigating a spike in errors | Filter runs by status and trace the pattern |
| Verifying a production fix | Replay the original run and confirm the issue no longer occurs |
| Preparing an audit report | Export or review traces that show decision paths and data handled |
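To illustrate the "Performance is slow" and "Investigating a spike in errors" rows, here is a minimal sketch that works on an in-memory list of spans. The `Span` class, its fields (`name`, `start`, `end`, `status`), and the tool name `lookup_invoice` are hypothetical and chosen for illustration. They are not the UiPath trace schema. The sketch computes each span's duration from its timestamps, picks the slowest span as the first bottleneck candidate, and filters spans by status.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Span:
    # Hypothetical span record; field names are illustrative, not a UiPath schema.
    name: str
    start: datetime
    end: datetime
    status: str  # e.g. "success", "failure", "retry"

    @property
    def duration_ms(self) -> float:
        # Elapsed time between the start and end timestamps, in milliseconds.
        return (self.end - self.start).total_seconds() * 1000


def slowest_span(spans: list[Span]) -> Span:
    # The span with the longest duration is the first bottleneck candidate.
    return max(spans, key=lambda s: s.duration_ms)


def spans_with_status(spans: list[Span], status: str) -> list[Span]:
    # Keep only spans with the given execution status, e.g. "failure".
    return [s for s in spans if s.status == status]


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0, 0)
    spans = [
        Span("LLM call", t0, t0.replace(second=2), "success"),
        Span("Tool: lookup_invoice", t0.replace(second=2), t0.replace(second=9), "failure"),
        Span("LLM call", t0.replace(second=9), t0.replace(second=10), "success"),
    ]
    slow = slowest_span(spans)
    print(slow.name, slow.duration_ms)  # Tool: lookup_invoice 7000.0
    print([s.name for s in spans_with_status(spans, "failure")])  # ['Tool: lookup_invoice']
```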

Trace types

Traces come in two distinct types, each serving a specific purpose in understanding and analyzing agent behavior:

- Agent run traces: These traces capture the step-by-step execution of an agent during a live or scheduled run. They show how the agent processed data, invoked tools, handled conditions, and responded to different states in real time.

- Evaluation run traces: Evaluation traces are generated when an agent is tested against predefined inputs, typically during model evaluations, scenario validations, or test cases. These help assess agent accuracy, decision quality, and behavior under controlled conditions.

Accessing traces

You can access both types of traces from two key locations:

- Agent Builder – While designing or testing your agent, traces are available directly in the builder:

  - The bottom panel opens automatically to the Execution Trail tab when you run your agent, showing live traces for the current run. You can also switch to the History tab to view past runs and add them directly to evaluation sets.

  - The Evaluations and Output tabs provide another view into recent runs, where you can inspect behavior and results alongside your agent definition.

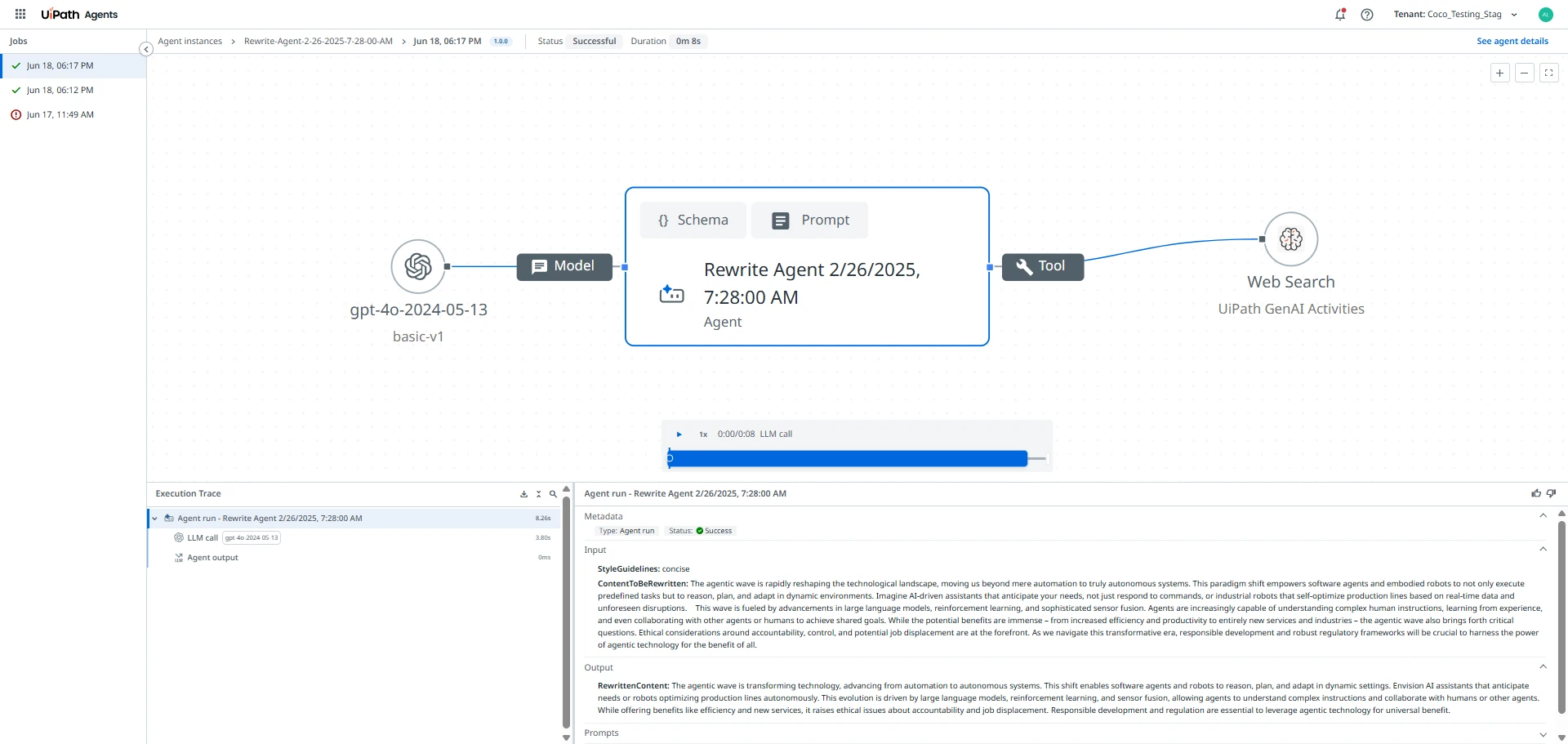

- Agent Instances page – Navigate to the Agents > Instances section, choose a specific agent, then select any run to open its trace view, which includes the full visual trace and log panel.

When viewing traces for either agent runs or evaluation runs, you gain visibility into the agent’s execution. You can:

- See execution outcomes, indicated by color-coded nodes: success, failure, or retry.

- Hover over any node to preview its start and end timestamps, execution status, and input and output snippets.

- Select a node to view its details, including complete JSON payloads, logs and errors, and runtime metrics (token usage, latency).
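The trace view arranges nodes hierarchically, with individual steps such as LLM calls and tool calls nested under the run. The sketch below approximates that view as an indented text outline with each node's status. It assumes that each node carries hypothetical `id`, `parent_id`, `name`, and `status` fields and that the agent run is the root node. These names are illustrative only, not the product's data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    # Hypothetical trace node; these fields are illustrative assumptions.
    id: str
    parent_id: Optional[str]  # None for the root node
    name: str
    status: str  # "success", "failure", or "retry"


def render_tree(nodes: list[Node]) -> list[str]:
    # Group nodes under their parent id so children can be looked up quickly.
    children: dict[Optional[str], list[Node]] = defaultdict(list)
    for node in nodes:
        children[node.parent_id].append(node)

    lines: list[str] = []

    def visit(parent_id: Optional[str], depth: int) -> None:
        # Depth-first walk, indenting two spaces per nesting level.
        for node in children[parent_id]:
            lines.append(f"{'  ' * depth}{node.name} [{node.status}]")
            visit(node.id, depth + 1)

    visit(None, 0)
    return lines


if __name__ == "__main__":
    trace = [
        Node("1", None, "Agent run", "failure"),
        Node("2", "1", "LLM call", "success"),
        Node("3", "1", "Tool call", "retry"),
        Node("4", "1", "Tool call", "failure"),
    ]
    print("\n".join(render_tree(trace)))
```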

Managing access to trace data

This section outlines how administrators can configure access to trace data using the role-based access control model.

To view trace logs you need the following permissions:

- Logs.View

- Jobs.View

For details on default role permissions, refer to Default roles.

The following matrix shows the visibility outcome for each permission combination. These combinations define which trace details you can view, depending on your role-based permissions.

| Logs.View | Jobs.View | Access result |
|---|---|---|
| Enabled | Enabled | All attributes |
| Enabled | Disabled | All attributes |
| Disabled | Enabled | Partial attributes (e.g., name, type) |
| Disabled | Disabled | No access |
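The permissions matrix reduces to a simple rule. Logs.View alone grants all attributes, and Jobs.View only matters when Logs.View is disabled. The function below restates the table as code. `trace_access` and `TraceAccess` are hypothetical names used for illustration and are not part of any UiPath API.

```python
from enum import Enum


class TraceAccess(Enum):
    # Possible outcomes from the permissions matrix.
    ALL_ATTRIBUTES = "All attributes"
    PARTIAL_ATTRIBUTES = "Partial attributes"
    NO_ACCESS = "No access"


def trace_access(logs_view: bool, jobs_view: bool) -> TraceAccess:
    # Logs.View on its own is enough to see every trace attribute.
    if logs_view:
        return TraceAccess.ALL_ATTRIBUTES
    # Without Logs.View, Jobs.View exposes only partial attributes (e.g. name, type).
    if jobs_view:
        return TraceAccess.PARTIAL_ATTRIBUTES
    return TraceAccess.NO_ACCESS


if __name__ == "__main__":
    # Print every row of the matrix.
    for logs in (True, False):
        for jobs in (True, False):
            print(f"Logs.View={logs}, Jobs.View={jobs}: {trace_access(logs, jobs).value}")
```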

When you lack the necessary permissions to view trace data, you see a message that explains whether access is fully or partially limited and provides guidance to request the required permission.

Feedback on agent runs (Preview)

Feedback is a critical mechanism for interpreting and improving agent runs. It enables you to review behavior, diagnose issues, and document meaningful patterns in how an agent makes decisions.

Beyond debugging, feedback acts as the core input to feedback-based episodic memory, allowing the agent to refine its decision policy gradually, without requiring full prompt rewrites for every adjustment.

The relationship between feedback and memory

While feedback acts as an annotation tool, its most powerful application is influencing episodic memory.

Providing feedback on a trace highlights behaviors the agent should replicate or avoid in future runs.

- Evolution over repetition: Unlike static resolutions, feedback-based memory allows the agent's behavior to improve over time. The agent learns to recognize patterns flagged as correct or incorrect.

- Targeted improvement: This approach is most valuable in flows where the agent is frequently "almost right" or where the decision policy is still developing.

- Selective memory: Not all feedback automatically becomes memory. You must actively decide which annotations represent high-value learning opportunities, so that low-quality or inconsistent feedback does not degrade performance.

Where to apply feedback

You can provide feedback on any span within an agent trace. This flexibility allows you to annotate specific tool calls, guardrail checks, or LLM outputs when reviewing or diagnosing behavior.

Only feedback applied to the agent run span is eligible for episodic memory. You can annotate any part of the trace for analysis, but only feedback attached directly to the agent run span is stored and retrieved as memory in future runs.
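The two rules for memory eligibility (feedback must sit on the agent run span, and you must deliberately select it) can be expressed as a simple filter. In this sketch, the `Feedback` record, its fields, the `promote_to_memory` flag, and the span IDs are all hypothetical. It models the selection logic only and does not show how the product actually stores or retrieves memory.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    # Hypothetical feedback record; field names are assumptions for illustration.
    span_id: str
    positive: bool  # True if the behavior was correct, False if it should be avoided
    comment: str
    promote_to_memory: bool  # hypothetical flag: reviewer chose this as a learning example


def memory_candidates(feedback: list[Feedback], agent_run_span_id: str) -> list[Feedback]:
    # Keep feedback that is attached to the agent run span AND was
    # explicitly selected; annotations on other spans are analysis-only.
    return [
        f for f in feedback
        if f.span_id == agent_run_span_id and f.promote_to_memory
    ]


if __name__ == "__main__":
    entries = [
        Feedback("run-1", True, "Correctly escalated the refund request.", True),
        Feedback("tool-7", False, "Tool call used the wrong invoice ID.", True),
        Feedback("run-1", False, "Minor tone issue, not worth learning from.", False),
    ]
    for f in memory_candidates(entries, "run-1"):
        print(f.comment)  # only the first entry qualifies
```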

When to apply feedback

While providing feedback on all traces would maximize iterative learning, in practice you should concentrate on traces that offer the highest value for optimization.

Focus on the following scenarios:

- Critical scenarios: Traces involving high-stakes decisions or high-impact errors.

- Recurring patterns: Areas where the agent consistently struggles or exhibits repetitive faults.

- Difficult decisions: Instances where the agent faced a complex choice.

- Negative sentiment: Runs that resulted in a poor user experience.

- Model behavior: Examples that clearly illustrate a specific behavior you want the agent to strictly copy or strictly avoid.

Applying feedback is crucial for continuous improvement. It allows you to encode better behaviors incrementally, making agent runs more reliable and consistent. For each piece of feedback, clearly identify its focus area:

- Output: Did the final result meet expectations?

- Plan execution (trajectory): Did the agent perform the task steps in the expected order?

- Comments: Use comments to enrich the feedback and inform memory retrieval.
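The plan execution (trajectory) question can be checked mechanically by comparing the sequence of steps in a trace against an expected order. The sketch below is one way to do that. It treats the expected steps as an ordered subsequence, so extra steps in between are allowed. The step names are hypothetical, and `follows_expected_order` is an illustrative helper, not a product feature. If you need an exact sequence match instead, compare the two lists with `==`.

```python
def follows_expected_order(actual_steps: list[str], expected_steps: list[str]) -> bool:
    # Each `in` test advances the shared iterator past the matched step,
    # so expected steps must appear in actual_steps in the same relative
    # order; extra steps in between are tolerated.
    remaining = iter(actual_steps)
    return all(step in remaining for step in expected_steps)


if __name__ == "__main__":
    expected = ["classify_request", "draft_reply", "send_reply"]
    actual_ok = ["classify_request", "lookup_customer", "draft_reply", "send_reply"]
    actual_bad = ["draft_reply", "classify_request", "send_reply"]
    print(follows_expected_order(actual_ok, expected))   # True
    print(follows_expected_order(actual_bad, expected))  # False
```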