Agents user guide

Managing UiPath agents

The Agents page in Automation Cloud serves as your centralized registry and observability hub for all UiPath agents. Use it to monitor performance, test conversational behavior, manage shared resources, and maintain visibility across your deployed ecosystem.

From a single location, you can:

- Monitor deployed agents and review performance metrics in real time.

- Test conversational agents before and after deployment.

- Investigate and resolve agent incidents or degraded health conditions.

- Access and deploy agent templates from the UiPath Marketplace.

- Manage Context Grounding indexes.

This centralized hub allows real-time monitoring of all agent runtimes, with alerts for errors, health score degradation, and poor runtime evaluation scores.

Terminology

- An agent instance is a single deployed agent within a running business process, represented as a task in BPMN. It is the live execution of an agent runtime that responds to specific triggers, queues, or other workflow conditions.

- Agent instance management represents the set of tasks related to monitoring, maintaining, improving, and terminating agents and agent jobs.

Key features

Here are the key features of the redesigned Agents page in Automation Cloud™:

- Real-time agent monitoring using BPMN workflows.

- Error handling and recovery through BPMN-defined events.

- Runtime agent coordination between agents and human operators.

- Merging and consensus handling via decision-making gateways.

- Performance analysis and optimization based on multiple metrics.

- Governance and compliance checks ensuring adherence to enterprise rules.

Overview

The Overview tab provides a high-level summary of your agent ecosystem, consolidating operational insights, performance indicators, and consumption analytics into one centralized view. It acts as a command center for understanding how agents are performing across your organization and how efficiently they are consuming resources.

Performance insights

The tab aggregates runtime data across all deployed agents to help you monitor performance trends and identify optimization opportunities. Key metrics include:

- Total jobs: Tracks the total number of completed agent executions, providing a measure of activity and adoption.

- Success rate: Measures the percentage of successful agent runs to help you evaluate reliability and detect recurring errors or instability in agent configurations.

- Average response time: Indicates how quickly your agents are able to complete tasks, letting you monitor latency and efficiency at scale.

- Agent unit consumption: Quantifies total unit usage across all agents, enabling better forecasting, budgeting, and resource allocation.
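As a rough illustration of how the four metrics above relate to individual job records, the following sketch aggregates them from a small in-memory list. The `JobRecord` type and its field names are hypothetical and exist only for this example; they are not part of any UiPath API, and the Overview tab may compute its figures differently.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    """Hypothetical record of one completed agent job (not a UiPath type)."""
    agent: str
    succeeded: bool
    response_seconds: float
    agent_units: float


def summarize(jobs: list[JobRecord]) -> dict[str, float]:
    """Aggregate total jobs, success rate (%), average response time, and unit usage."""
    total = len(jobs)
    if total == 0:
        # Avoid dividing by zero when no jobs ran in the selected period.
        return {"total_jobs": 0, "success_rate": 0.0,
                "avg_response_seconds": 0.0, "agent_units": 0.0}
    successes = sum(1 for job in jobs if job.succeeded)
    return {
        "total_jobs": total,
        "success_rate": 100.0 * successes / total,
        "avg_response_seconds": sum(j.response_seconds for j in jobs) / total,
        "agent_units": sum(j.agent_units for j in jobs),
    }


# Illustrative data only.
jobs = [
    JobRecord("invoice-agent", True, 2.0, 1.5),
    JobRecord("invoice-agent", False, 4.0, 1.5),
    JobRecord("triage-agent", True, 1.0, 0.5),
    JobRecord("triage-agent", True, 1.0, 0.5),
]
summary = summarize(jobs)
print(summary)
```

With this sample data, three of four jobs succeed, so the success rate is 75%, the average response time is 2.0 seconds, and total consumption is 4.0 units.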

Monitoring and feedback analytics

The tab also surfaces consumption and feedback trends across your environment:

- Agent unit consumption reports identify the most active or costly agents, helping prioritize tuning or resource allocation.

- Feedback summaries highlight which agents receive the most user interactions or performance ratings, helping teams focus on improving user experience and impact.

Filter and time-based analysis

Administrators can analyze data across various dimensions, such as agent type, deployment state, or time range, to identify anomalies and evaluate trends over defined operational periods (for example, daily, weekly, or monthly views). These filters enable targeted analysis and streamline reporting.

- Manage your deployed agents and monitor their performance.

- Manage and monitor your early adopter agents.

Deployed agents

The Deployed agents tab provides a consolidated view of all agents active in production, along with real-time performance, health, and feedback metrics. It acts as the primary workspace for monitoring operational efficiency, diagnosing issues, and ensuring that deployed agents deliver consistent, high-quality outcomes.

Unified agent visibility

This view aggregates all production-ready agents across your tenant, allowing administrators and developers to quickly assess operational status and recent activity. Each agent card summarizes key operational metrics, including:

- Success rate – The percentage of successful runs, helping you identify degraded or underperforming agents.

- Average response time – A measure of execution speed and efficiency across recent jobs.

- Feedback volume – The number of user or system evaluations collected for the agent.

- Health indicators – Visual cues (for example, Healthy, Degraded) reflecting overall performance trends and runtime stability.

Agents can be filtered by state, type, folder, or time range, enabling precise monitoring across business areas or deployment environments.

Operational insights and performance tracking

The tab provides contextual analytics that extend beyond simple runtime status. You can explore:

- Active and completed jobs – Separate timelines track live executions versus completed runs, helping identify workload peaks and bottlenecks.

- Error and latency patterns – Performance charts display execution errors, time-to-first-response, and latency percentiles, allowing teams to fine-tune responsiveness.

- Feedback trends – Aggregated sentiment analysis (positive vs. negative) shows how agent quality evolves over time and which updates affect user satisfaction.

These insights help diagnose systemic issues, validate new deployments, and prioritize optimization efforts.

Instance management charts are unavailable when CMK encryption is enabled for traces. Because trace attributes are encrypted in Insights and therefore cannot be queried, the analytics required to generate these charts cannot run.

Usage and consumption analysis

The dashboard highlights how agents interact with their environments and how resources are consumed:

- Agent unit usage – Tracks total consumption and identifies outliers in cost or resource utilization.

- Top capabilities invoked – Lists the most frequently used actions (tools, activities, or indexes) across agent runs, helping teams understand behavior patterns and common dependencies.

Detailed agent runtime view

Selecting an individual agent opens a detailed analytics workspace, combining:

- A runtime diagram that maps the agent’s logic, tools, and memory sources.

- Health score visualizations that assess prompt design, tool integration, and evaluation coverage.

- Execution traces with granular visibility into every run (inputs, outputs, errors, and timing details), allowing developers to reconstruct and debug the agent’s reasoning process.

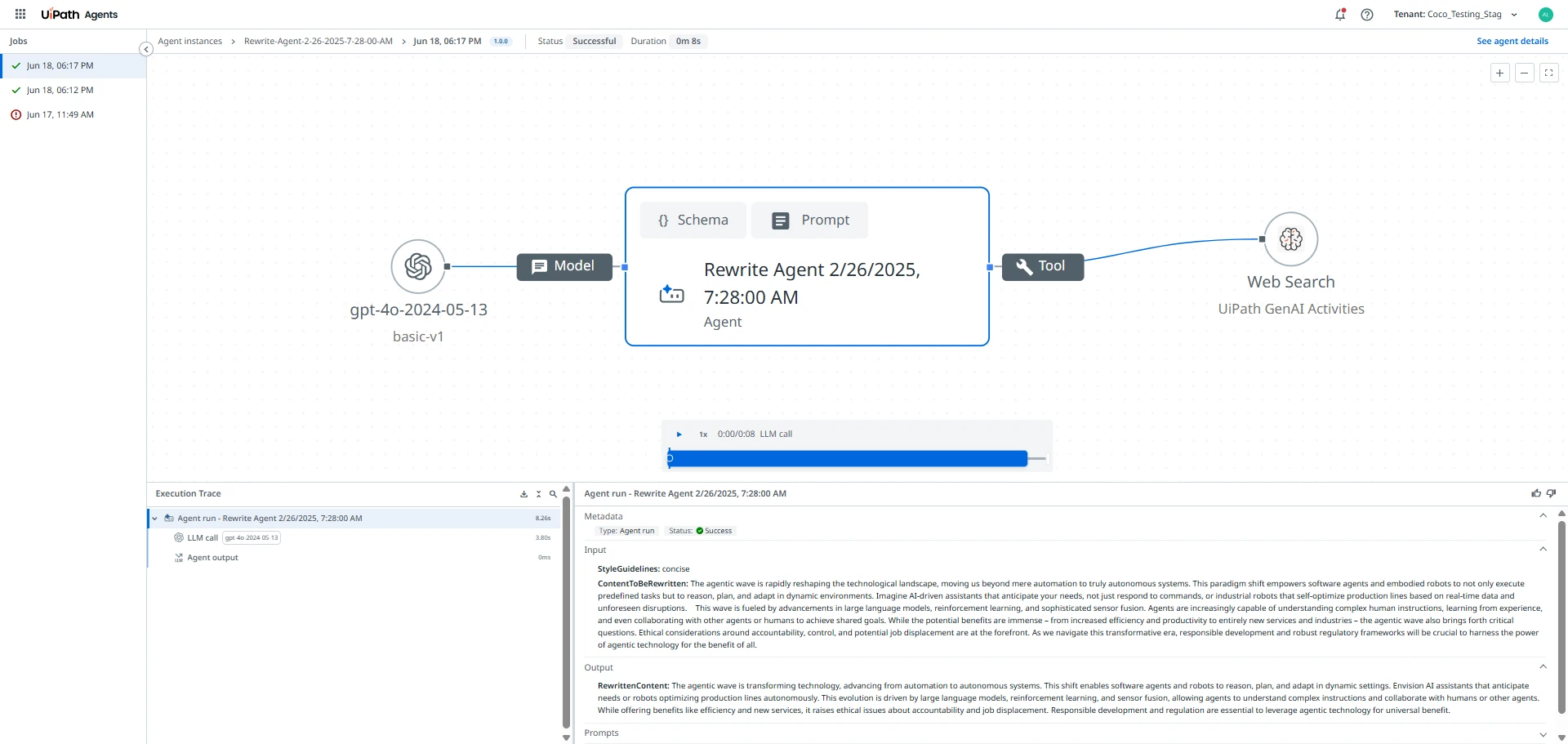

The agent instance view provides full observability into individual agent executions, offering a complete trace of decisions, actions, and performance metrics within a single run.

Understanding the trace view

The trace view combines a graphical representation of the agent’s logic with time-aligned execution data, letting you inspect the flow and outcomes of each step. The canvas shows nodes representing major agent components like models and tools, connected in sequence.

Once you're in the Trace view of an agent run, follow these steps to interact with and investigate the trace data:

- Preview node information. Hover your mouse over any node on the canvas to see a tooltip that includes:
  - The execution status (success, retry, or failure)
  - Start and end timestamps
  - A preview of the input and output data

  This is helpful for getting a quick sense of what happened at each step without needing to open the full details.

- View full node details. Select any node to open a detailed panel which includes:
  - Complete input and output payloads (JSON)
  - Execution logs or error messages (if applicable)
  - Runtime metrics, such as latency and token usage
  - Configuration parameters, if available

- Navigate between related steps to explore how information flowed through the agent:
  - Select connected nodes to move upstream or downstream.
  - This helps you trace how decisions, inputs, or outputs from one step influenced the next.

- Use the Execution Trace panel. This panel shows a chronological list of all recorded actions during the agent run.
  - Select a row in the trace log to highlight the corresponding node on the canvas.
  - Similarly, selecting a node on the canvas automatically highlights the relevant row in the trace log.
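The two-way highlighting between trace log rows and canvas nodes can be pictured as a pair of lookups over the same trace data. The sketch below is only a conceptual model: `TraceRow`, its fields, and the node names are hypothetical and do not reflect the actual trace schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceRow:
    """Hypothetical trace log entry; field names are illustrative only."""
    row_id: int
    node_id: str
    status: str  # "success", "retry", or "failure"
    start_ms: int
    end_ms: int


def index_rows_by_node(rows: list[TraceRow]) -> dict[str, list[int]]:
    """Map each node to its trace rows, in chronological order."""
    by_node: dict[str, list[int]] = defaultdict(list)
    for row in sorted(rows, key=lambda r: r.start_ms):
        by_node[row.node_id].append(row.row_id)
    return dict(by_node)


# Illustrative run: the model calls a tool, which is retried once.
rows = [
    TraceRow(1, "model", "success", 0, 400),
    TraceRow(2, "search-tool", "retry", 400, 900),
    TraceRow(3, "search-tool", "success", 900, 1300),
    TraceRow(4, "model", "success", 1300, 1800),
]

rows_for_node = index_rows_by_node(rows)                    # node selected: which rows to highlight
node_for_row = {row.row_id: row.node_id for row in rows}    # row selected: which node to highlight
print(rows_for_node["search-tool"], node_for_row[4])
```

A node that runs more than once, such as the retried tool here, maps to several rows, while each row maps back to exactly one node.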

Conversational agents

The Conversational agents tab provides a complete environment for managing, testing, and analyzing agents that interact with users through natural language dialogue. It brings together design-time testing, live chat evaluation, runtime insights, and feedback analytics to help teams continuously improve conversational performance and reliability.

Conversational agents can be tested directly within the chat interface. This embedded playground lets you simulate real-world exchanges and evaluate how the agent handles intent recognition, turn-taking, and context continuity. You can:

- Engage with the agent in real-time, sending natural language prompts and observing live responses.

- Test prompts or craft custom messages to assess coverage across scenarios.

- Evaluate how the agent applies tools, memory, and models during a conversation loop.

This testing environment helps validate conversational logic before deployment, ensuring accuracy and tone align with intended use cases.

The Runtime view provides end-to-end visibility into how conversational agents perform in production, capturing live sessions, completion rates, and user interactions. It tracks key operational metrics, such as response time, duration, and agent unit consumption, to ensure performance, scalability, and reliability across workloads.

The Trace view enables debugging and optimization by mapping the full reasoning and execution flow of an agent. You can inspect decisions, tool calls, governance checkpoints, and performance heatmaps to understand behavior, validate logic, and enhance overall conversational quality.

The Feedback view aggregates user and system evaluations into a central dashboard. It visualizes sentiment trends, highlights recurring issues, and links feedback to individual runs for deeper context. This enables iterative fine-tuning and continuous quality improvement.

Draft agents

The Draft agents tab contains unpublished or in-progress agents being developed.

Each draft is displayed as a card that includes:

- The agent name (or "Untitled", if not yet renamed).

- The last updated timestamp.

- A quick link to reopen the draft in Studio Web for further editing.

Draft agents can be resumed, edited, or published once finalized.

Context Grounding indexes

The Context Grounding indexes tab enables you to monitor the status and health of your indexes and track their ingestion progress at the tenant level.

The Index Monitoring section provides graphical insights into the following metrics:

- Queries: Tracks the number of queries performed on your indexes over a selected time interval.

- Index jobs: Displays the number of completed ingestion jobs. If no jobs have run in the selected period, a placeholder graphic is shown.

The Indexes table lists all available indexes in your tenant and shows the following information for each index:

- Index name, Folder, and Data source

- Health status, indicating whether an index is Healthy or Degraded

- Storage size, Ingestion status, Last sync, and Last queried timestamps

Select an index from the table to open its details page. The Index details view provides:

- Queries during the selected interval.

- A Health chart showing the overall health score and the two component criteria.

- Ingestion history, listing completed, failed, skipped, or deleted sync runs.

Use this view to investigate degraded indexes, review ingestion reliability, or check when an index was last queried.

Index health score

The index health score helps you monitor the condition of your Context Grounding indexes and identify those that may need maintenance or cleanup. It provides an automated, objective score showing how well each index performs and how frequently it’s used.

Each index receives a health score based on two criteria:

- Ingestion reliability – How successfully documents were processed during the latest ingestion run.

- Utilization – How recently the index has been queried.

The combined score helps you determine whether an index is healthy and delivering value (overall score above 75%), or degraded (overall score 75% or below). The score updates automatically after each successful ingestion or query.

Index health is recalculated every 15 minutes for all tenants and only applies to indexes with completed ingestion jobs. New indexes have a 24-hour grace period after creation before health scoring begins.

The following table describes how each health criterion is calculated and weighted to determine the overall index health score.

| Criterion | Metric | Formula | Notes |
|---|---|---|---|
| Ingestion reliability | Success rate from last ingestion | 100 × (1 - failed_docs / total_docs) | Skipped and deleted documents are excluded. If no ingestion is completed within 24h, score = 0. |
| Utilization | Days since last query (max 90 days) | 100 × (1 - days_since_last_query / 90) | After 90 days without any query, score = 0. |
| Overall health | Average of both metrics | (Ingestion reliability + Utilization) / 2 | Calculated only if both component scores exist. |
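The arithmetic in the table can be worked through with a short sketch. The function names are hypothetical, and the code covers only the formulas, the 90-day cap, and the 75% threshold stated in this section. It does not model the recalculation schedule, the grace period for new indexes, or any rounding the product may apply.

```python
from typing import Optional


def ingestion_reliability(failed_docs: int, total_docs: int) -> Optional[float]:
    """100 * (1 - failed / total). total_docs excludes skipped and deleted documents."""
    if total_docs <= 0:
        return None  # assumption: no component score without processed documents
    return 100.0 * (1 - failed_docs / total_docs)


def utilization(days_since_last_query: float) -> float:
    """100 * (1 - days / 90), with days capped at 90 so the score bottoms out at 0."""
    capped = min(max(days_since_last_query, 0), 90)
    return 100.0 * (1 - capped / 90)


def overall_health(ingestion: Optional[float], util: Optional[float]) -> Optional[float]:
    """Average of both component scores, calculated only if both exist."""
    if ingestion is None or util is None:
        return None
    return (ingestion + util) / 2


def health_status(score: float) -> str:
    """Healthy above 75%, Degraded at 75% or below."""
    return "Healthy" if score > 75 else "Degraded"


# Example: 1 of 8 documents failed, and the index was last queried 45 days ago.
ingestion = ingestion_reliability(failed_docs=1, total_docs=8)   # 87.5
util = utilization(days_since_last_query=45)                     # 50.0
score = overall_health(ingestion, util)                          # 68.75
print(score, health_status(score))
```

In this example the ingestion run was mostly reliable, but the index has not been queried for 45 days, so the overall score of 68.75 falls at or below the 75% threshold and the index is reported as Degraded.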

You can review the health status in two places:

- The Context Grounding Indexes list – The Health column shows a colored indicator (green for Healthy, red for Degraded). Hovering over it displays details for each dimension (Ingestion reliability and Utilization).

- The Index details view – The dedicated Health chart displays the overall score and component metrics. You can also track ingestion history and investigate issues when reliability is low.

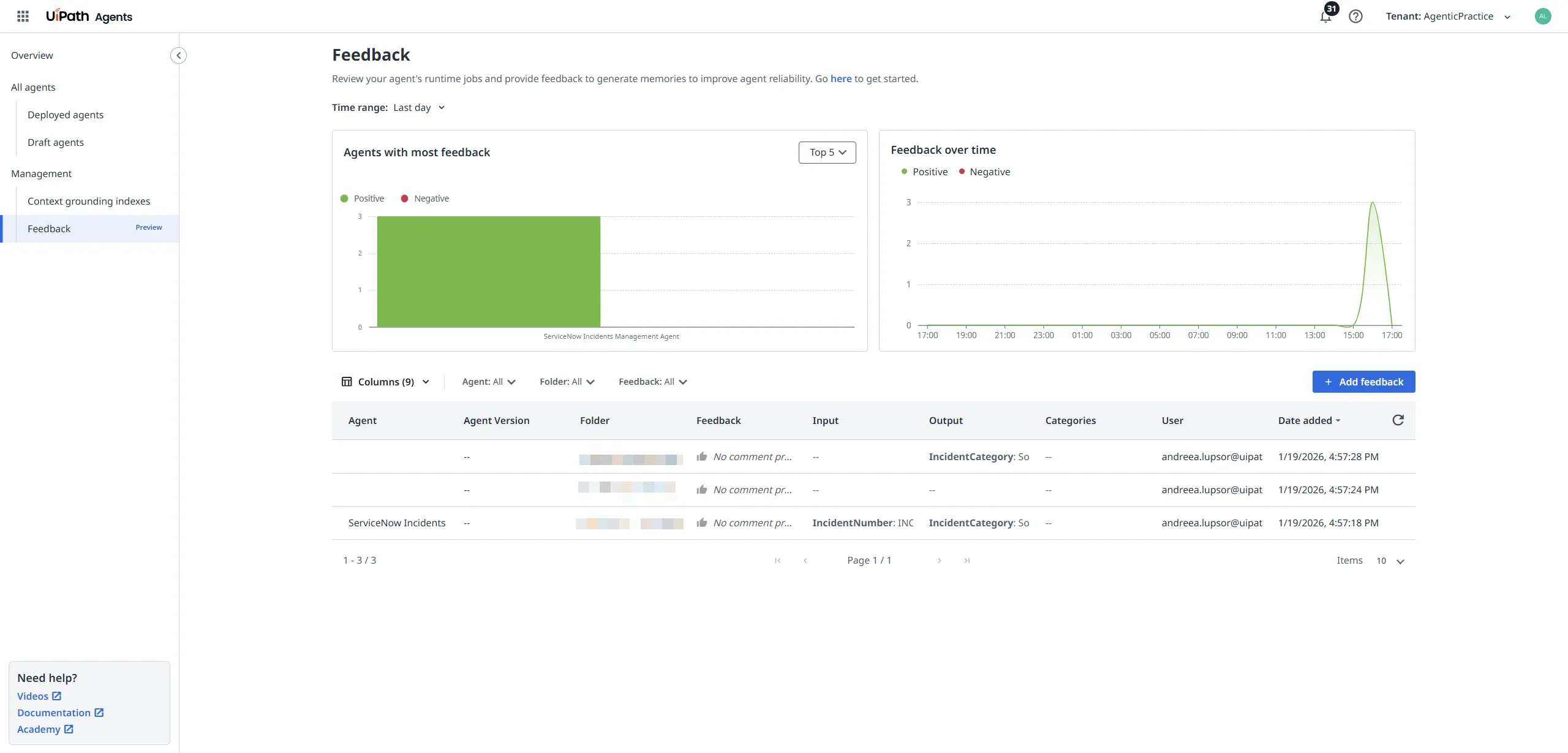

Feedback tab (Preview)

The Feedback tab provides a centralized view for reviewing agent traces and capturing feedback that helps improve agent reliability over time. It connects runtime analysis with feedback-driven episodic memory, enabling agents to gradually refine their decision-making without constant prompt rewrites.

Feedback serves two complementary roles:

- Runtime analysis and diagnostics: Review completed agent runs to understand behavior, identify issues, and annotate specific decisions or outputs.

- Input to episodic memory: High-quality feedback can be promoted to episodic memory, allowing the agent to repeat successful behaviors and avoid incorrect ones in future runs.

Feedback overview

The top section of the page provides aggregated insights:

- Agents with most feedback shows which agents receive the highest volume of positive and negative feedback.

- Feedback over time visualizes feedback trends, helping you correlate behavior changes with improvements or regressions.

- Time range filters let you narrow the analysis to recent or historical runs.

Figure 3. The Feedback tab in Agents Instance Management

Adding feedback to agent runs

To provide feedback:

- Select Add feedback from the Feedback page.

- Choose an agent runtime job from the list of executed jobs.

- Review the trace and attach feedback to the relevant span.